February 13, 2023

This is a first draft of the first part of a longer note on AI.

This is not the promised briefing note on ChatGPT and how it will affect work.

AI Hype

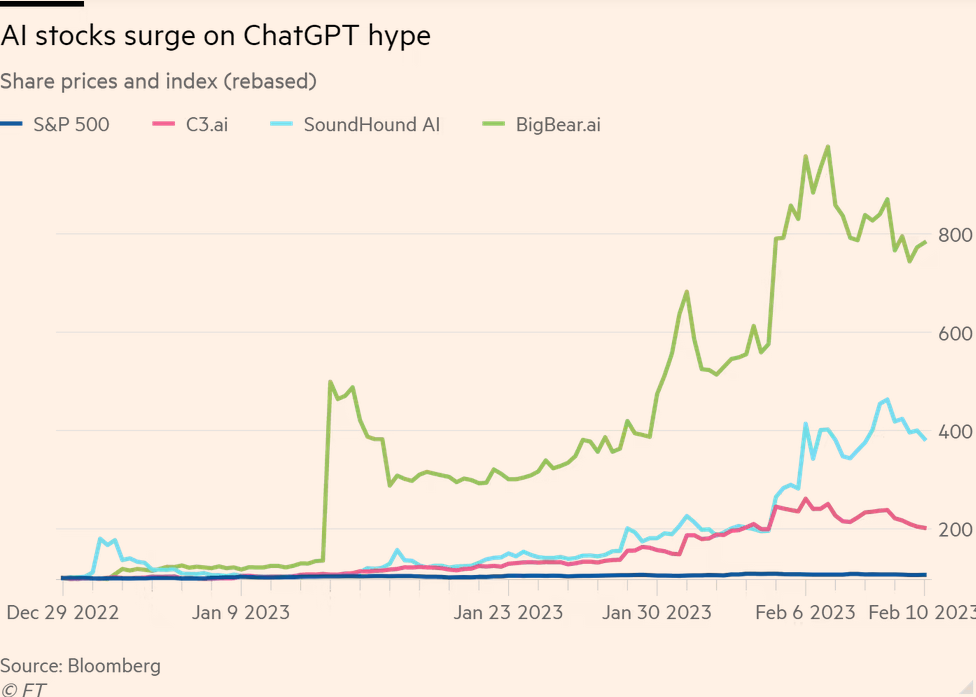

- A speculative bubble has formed around generative AI companies and their tools (like OpenAI and ChatGPT).

- This investment bubble was partly OpenAI's goal in the way ChatGPT was launched: the launch has been a hype campaign for the tool.

- The hype is somewhat deserved as it is a new(ish) technology that will change the way some work is done.

- What is not being considered are the limitations of the tool and the specific areas where generative AI will likely become dominant.

ChatGPT in particular has caught the public’s attention, drawing around 100mn monthly active users in January after launching the previous month, according to UBS.

“In 20 years following the internet space, we cannot recall a faster ramp in a consumer internet app,” the bank said. “It took TikTok nine months after its global launch to add 100mn incremental users and it took Instagram 2.5 years.” (FT)

Along with the speculative investment bubble, there are going to be wild short-term swings in the stock prices of companies when they make mistakes that either do not matter or simply show the natural limitations of generative AI.

One such "mistake" was Google's first ad for Bard (its competitor to Microsoft's Bing/ChatGPT web search). Google was punished for exposing the true nature of generative AI in a classic "there's no truth in advertising" fashion:

Alphabet’s shares fell almost 8 per cent after Bard last week produced an ambiguous answer to a question about pictures taken by the James Webb Space Telescope.

AI Makes Mistakes

Generative Pre-trained Transformers (GPTs) make mistakes.

It is actually these mistakes that make GPT models bad at what Microsoft and Google are trying to do (provide accurate answers to questions) and very good at what they are going to be used for: entertainment and automation of some business/consumer processes.

Some level of mistakes (and not being able to tell the difference) is what makes the content created by GPT seem almost creative. It is the "creativity" of making random mistakes and then leaning into those mistakes in confident ways that makes some answers extremely entertaining.

For example, it is unlikely that a system that self-corrects could create a new song in the style of your favourite pop artist.

This is one of the most important things to know about GPT models: they are statistical models and can only give you an approximate answer.

What is GPT?

- GPT

- Generative Pre-trained Transformer

Generative in that it creates something new and expands on that "new" thing.

Pre-trained in that it "trains" itself on a giant database that you give it, without the need for extensive manual tagging and categorization of that data. (Though all these models require massive human intervention even after they are "pre-trained".)

Transformer in that it takes information that it has and transforms that information (words, numbers, etc.) into an associative matrix to be able to output something coherent.

GPT is a fancy statistical model. As such, it is making a best guess using the data it has access to and that guess is not going to be correct a lot of the time.

GPT takes words and finds their context by finding other words near that word in its massive database of sentences. It can then build "new" sentences using that information and expand by building on the words and phrases it cobbled together in the previous step.

Beyond the algorithm, it is a very large, multi-dimensional database, just as all AI is basically a computer searching and building databases of relational data.

The magic comes from the Natural Language Processing (NLP) that is used to write the sentences that sound human. These NLP techniques are based on complex linguistics algorithms and the word-association database.

All these models can do is expand (which includes "summarize") on a word or phrase you give them, using its associations in the database.

Let's use an example:

Take the word "books" and type it into ChatGPT.

It will use a natural language process to write a long paragraph telling you about "books".

How?

It finds information about "books" by searching its database for the word "book". It marks words that are "close" to the word book and counts them. The more similar close associations it finds of other words near "book", the higher rank it gives those words and phrases in its model.

The data in the database it is searching is made up of sentences gathered mostly from the internet. Sentences written by people that already (mostly) make sense. So, the more times it sees (for example) "The Iron Heel" and "book" close together, the more it guesses that "The Iron Heel" is a book. Other words are also associated with "The Iron Heel" and it can build a web of words associated with those new associated words. And so on until it has a very large web of words associated with each other.
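To make the counting idea concrete, here is a toy sketch in Python. It is only an illustration of the word-association description above, not how ChatGPT is actually built. The four sentences, the function name `count_neighbours`, and the window of three words are all made up for the example.

```python
from collections import Counter

# Hypothetical mini "database" of sentences; a real model uses vastly more text.
SENTENCES = [
    "the iron heel is a book by jack london",
    "jack london wrote the book in 1908",
    "a book is a set of printed pages",
    "the library lends every book for free",
]

def count_neighbours(sentences, target, window=3):
    """Count the words that appear within `window` positions of `target`."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        for i, word in enumerate(words):
            if word != target:
                continue
            lo = max(0, i - window)
            hi = min(len(words), i + window + 1)
            for j in range(lo, hi):
                if j != i:  # do not count the target word itself
                    counts[words[j]] += 1
    return counts

neighbours = count_neighbours(SENTENCES, "book")
# Words seen near "book" most often get the highest rank.
print(neighbours.most_common(5))
```

Even in this tiny sample, "london" ends up ranked above words like "library" simply because it shows up near "book" more often. The sample also ranks filler words like "a" highly, which hints at how much cleaning and weighting a real system needs.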

This word association web will allow it to produce a sentence on the history of "books" and some examples of "books". The GPT will then expand on "books" and "history" using associations between those two words to write a longer paragraph about "history" and "books". And so on, until it has written a length of writing considered adequate.
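The "expand on the last thing written" step can be sketched the same way. The example below assumes a much cruder rule than any real GPT uses: it only looks at which word most often came directly after the current word. The corpus and the names `build_followers` and `expand` are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical training text, standing in for the "database" of sentences.
CORPUS = (
    "books have a long history . "
    "books have many readers . "
    "the history of books is long ."
).split()

def build_followers(words):
    """For each word, count which words come directly after it."""
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers

def expand(start, followers, length=6):
    """Grow a sentence by repeatedly appending the most common next word."""
    output = [start]
    for _ in range(length):
        options = followers.get(output[-1])
        if not options:
            break  # nothing ever followed this word in the corpus
        output.append(options.most_common(1)[0][0])
    return " ".join(output)

FOLLOWERS = build_followers(CORPUS)
print(expand("books", FOLLOWERS))
```

The output reads like a sentence only because the source sentences already made sense. The code has no idea what a book is.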

It might even talk about The Iron Heel, Jack London, or something about writers generally gleaned from writings about Jack London.

This "word association" also applies to more complex phrases as the model database grows and "relationships" or distances between words are identified.

It is the "nearness" calculation between words and phrases that makes the GPT (and the natural language processing) quite good at summaries and "expanded" explanations of a topic. It just builds on the previous topic with "nearby" topics.
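One common way to put a number on "nearness" is cosine similarity between lists of counts. This is a sketch under that assumption; the note does not say which measure any particular GPT uses. The three words and their counts are invented for illustration.

```python
import math

# Hypothetical counts of how often each word appeared near the context
# words ("author", "pages", "engine"). The numbers are made up.
VECTORS = {
    "book":  [8, 9, 0],
    "novel": [7, 6, 0],
    "car":   [0, 1, 9],
}

def cosine_similarity(a, b):
    """Close to 1.0 when two count lists point the same way, 0.0 when they share nothing."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest(word, vectors):
    """Find the other word whose counts are most similar to those of `word`."""
    others = (w for w in vectors if w != word)
    return max(others, key=lambda w: cosine_similarity(vectors[word], vectors[w]))

print(nearest("book", VECTORS))
```

Note that `nearest` returns something for every word, including "car", whose closest match here is only a very weak one.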

However, it also means that it will give wrong answers because it will always be able to find associations between words—even if that association is incorrect. And, it always gives an answer because the model has no way of telling if its answer is correct or not.
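A small sketch of why there is always an answer: if the last step is "turn scores into probabilities and pick the top one", the top one exists whether the evidence is strong or nearly non-existent. The scores below are invented, and the function names are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw association scores into probabilities that sum to 1."""
    biggest = max(scores.values())
    exps = {w: math.exp(s - biggest) for w, s in scores.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}

def best_guess(scores):
    """Always return the top-ranked word; there is no 'I don't know' option."""
    probs = softmax(scores)
    word = max(probs, key=probs.get)
    return word, probs[word]

# Hypothetical scores for the word after "The Iron Heel was written by Jack".
strong = {"London": 5.0, "Orwell": 1.0, "Dickens": 0.5}
# Hypothetical scores for a question the data barely covers.
weak = {"London": 0.2, "Orwell": 0.1, "Dickens": 0.1}

print(best_guess(strong))
print(best_guess(weak))
```

Both calls return a word. In the second case the winner is barely ahead of the alternatives, but nothing in the output sentence a reader sees would signal that.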

In some respects, much of what humans do is either a summary of concepts or an expansion of understanding of a complex term or idea when they talk to each other. This is why it seems "smart" at some things because its database is larger than your memory in some areas.

However, unlike human brains, AI models are just statistical models. They are only able to put things together that appear close together in the database. Databases of imperfectly constructed documents originating from imperfect people.

Even as the database gets larger and better, it will continue to have these inherent limits. And, in some ways, larger databases are all it can really do right now. And, there are real limits to the size of database it can use.

Limitations

GPT models can only re-order data. They cannot add analysis or even tell if the answer is false.

Understanding the limitations and where it excels is important to analysis of where it will affect, enhance, and harm current work, communications, understanding, and social interactions.

Understanding the limitations of this tool also means understanding the ownership structures, the development processes, and the physical limits on processing the large datasets behind GPT models.

These all limit the implementation, use, and to a large extent the ability of the GPT ecosystem to become "better" at answering questions.

There are physical, economic, and technical limitations to the model in addition to the limitations of the statistical model described above.

OpenAI is for-profit. Its technology is proprietary, including the underlying technology of ChatGPT called GPT-3(.5).

Open source versions of the proprietary GPT-3 models are available. But, as with most non-capital backed digital infrastructure, they are not as easy to use for consumers.

Open source alternatives such as GPT-J and GPT-NeoX (which are arguably more powerful than GPT-3) are significantly cheaper to run if you can find a powerful enough server infrastructure because you are not paying the (monopoly) license fees.

Some services are starting to pop up that will run these models, but this will be a niche market tailored to specific use-cases. The limitation is how powerful a computer you need to run GPT.

Infrastructure

GPT servers make heavy use of GPUs (graphics processing units) compared to your desktop computer or server, which mostly use CPUs (general-purpose central processing units). The reason for this is the type of calculations they are doing.

Similar to 3D graphics rendering, GPT and other AI statistics involve complex math on large matrices of information.
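To give a feel for the math involved, here is a plain Python sketch of matrix multiplication that also counts the multiply-and-add steps. It is deliberately naive; real systems use optimized GPU libraries, and the function name `matmul` is just for this example.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows, counting multiply-adds."""
    rows, inner, cols = len(a), len(b), len(b[0])
    result = [[0.0] * cols for _ in range(rows)]
    operations = 0
    for i in range(rows):
        for j in range(cols):
            # Each result cell depends only on one row of `a` and one
            # column of `b`, so every cell could be computed in parallel.
            for k in range(inner):
                result[i][j] += a[i][k] * b[k][j]
                operations += 1
    return result, operations

a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
product, ops = matmul(a, b)
print(product, ops)

# For square n-by-n matrices the work grows as n * n * n.
for n in (10, 100, 1000):
    print(n, n ** 3)
```

Because each cell of the result can be worked out independently, hardware with thousands of small cores running at once (a GPU) handles this far better than a CPU with a handful of fast cores.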

Why does this matter? GPUs are expensive when compared to CPUs.

Fewer companies produce GPUs, the software that can access their proprietary components is more "closed", they are in demand for cryptocurrency mining, and they are made primarily for high-end gaming platforms.

This physical constraint on access to GPUs is an important limitation on the growth of GPT-type usage. As an example, ChatGPT is run on OpenAI's infrastructure built by Microsoft.

According to Microsoft, OpenAI's computer would rank among the five largest supercomputers in the world. It has more than 285,000 CPU cores and 10,000 GPUs, with 400 gigabits per second of network connectivity for each GPU server.

Microsoft has spent billions building this infrastructure. And it has given OpenAI an additional $1B to spend by 2025 on server development.

You cannot buy this stuff off the shelf. And, it is actually hard to even rent access to this kind of infrastructure.

The "innovation" of the new OpenAI GPT models (GPT-3) is actually just the size of the database they are "self-training" from and the ability to use massive computers to process that data. GPT-3 is a lot better than GPT-2 (which you might not have heard of), but only because the database was bigger and the computer doing the math was faster.

There are some obvious and some not-so-obvious problems with this as a general-use tool. We will discuss those later in the week.